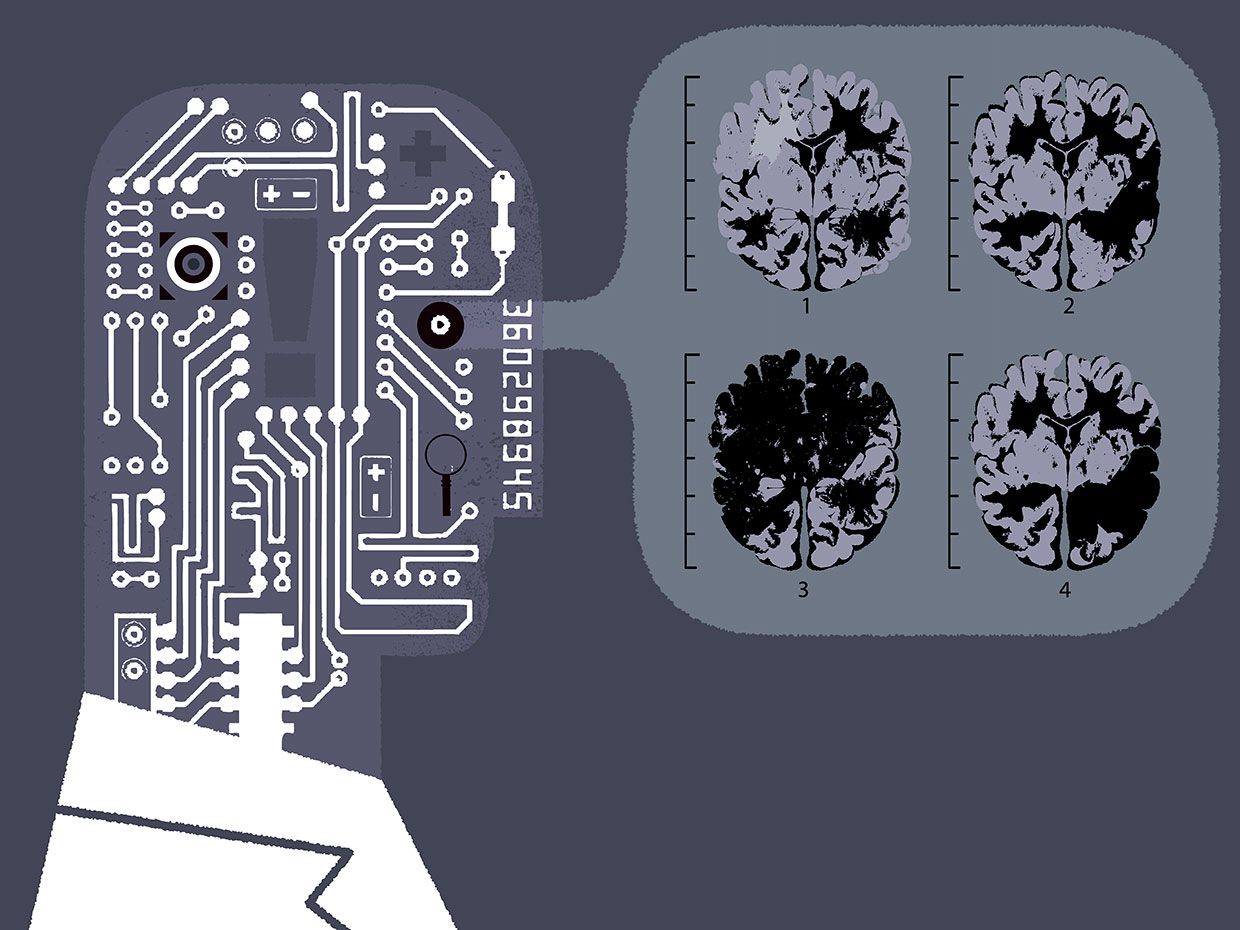

Last June, a team at Harvard Medical School and MIT showed that it’s pretty darn easy to fool an artificial intelligence system analyzing medical images.

With thoughtful approaches, the medical industry can prepare its AI systems for adversarial attacks.

Jonathan Zittrain argues for planning smart: “We shouldn't rush to anticipate every terrible thing that can happen with a new technology, but rather release and iterate as we learn.” Trying to anticipate and prevent every possible adversarial attack on a medical AI system could cripple its rollout, delaying the good the system might do, such as diagnosing patients in rural areas who lack access to disease specialists.